Data Science on small datasets: Deriving sensible conclusions when you do not have sufficient evidence

Permutation tests and bootstrap resampling: using non-parametric methods when you do not know anything about the underlying distribution of the data.

Let us understand the context by taking 3 use cases here —

Case (1): You have very few data points and you want a rough estimate of the average height of the male population in a district

You decide to carry out a survey, but you manage to collect only 20 data points, while the male population of the district is around 10 million.

Let’s say the data points represent heights in cm:

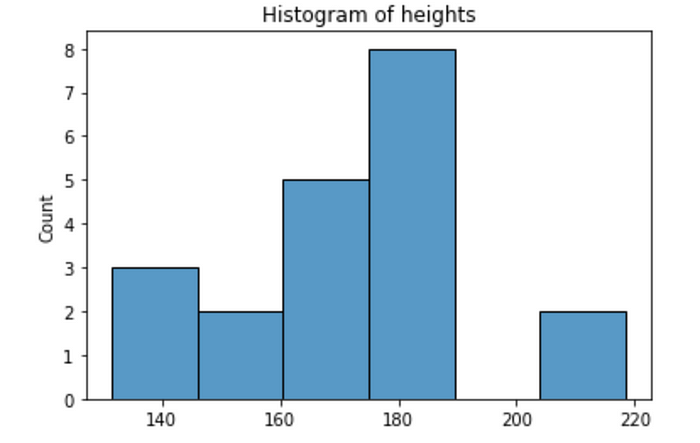

20 sample heights of male population (collected) - [181.7, 165.2, 178.93, 211.31, 138.33, 150.54, 173.77, 144.34, 185.1, 180.02, 173.56, 218.52, 187.16, 161.22, 164.39, 131.42, 180.98, 178.62, 157.1, 181.03]

The sample mean of the 20 observations above is 172.16 cm. But would you conclude that the average height of the population is 172.16 cm? Not really, because with so little data you cannot be sure whether this average carries over to the entire population. You would be better off giving an interval estimate of the average height with confidence bounds.

You can use bootstrap sampling for this. The benefit of bootstrap sampling over distribution-based techniques (like a z-distribution interval) is that you do not need to know anything about the distribution of the underlying population. In this case we know that heights are approximately normally distributed, so a z-distribution estimate would make sense. But what if you know nothing about the distribution? In that case it’s better to use a non-parametric approach like bootstrap sampling.

How does bootstrap sampling work?

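In the above case, you have 20 data points. Let’s take a bootstrap sample size of 200 and 1000 bootstrap samples. Intuitively, we randomly pick one of the 20 data points 200 times with replacement (meaning that a data point stays available after it is picked, so the same value can be drawn more than once) and collect the picks in a bootstrapped sample array. Let’s call this bs_1. We repeat this process 1000 times to create bs_1, bs_2, ..., bs_999, bs_1000. The bootstrap sample size and the number of bootstrap samples are up to you; here I have used 200 and 1000 respectively. Keep in mind that the conventional choice for the bootstrap sample size is the size of the original data (20 here); a larger size such as 200 makes the resulting interval narrower than the 20 observations alone justify.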

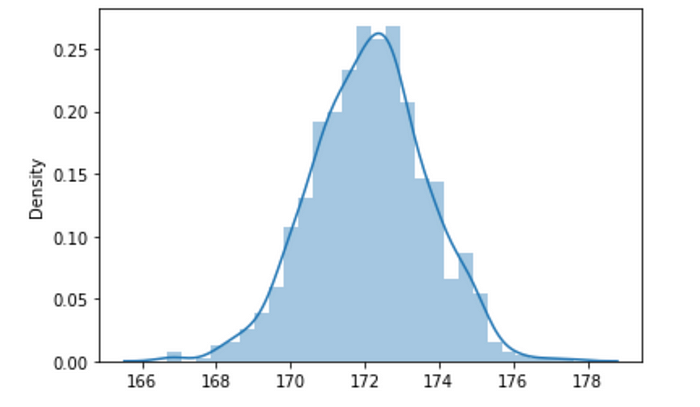

Now, take the means of all 1000 bootstrap samples and plot the distribution of these sample means. You will get an approximately normal distribution (as per the Central Limit Theorem).

Now, let’s say you want a 95% confidence interval for the average population height. Just take the central 95% of the values (from the 2.5th percentile up to the 97.5th percentile).

The range of your estimate widens as the confidence level increases, because a wider range is more likely to contain the population mean than a narrower one. Interval estimates with confidence bounds like this are much better than giving out a point estimate, such as the average of the 20 observed heights.

Code snippet for running the above —
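The following is a minimal sketch in Python with NumPy, not necessarily the author’s original code. The helper name `bootstrap_mean_ci` and its parameters are illustrative assumptions. `sample_size` defaults to the size of the data, which is the usual bootstrap convention; pass `sample_size=200` to reproduce the settings described above.

```python
import numpy as np

# The 20 sampled heights (cm) from the survey above.
heights = np.array([
    181.7, 165.2, 178.93, 211.31, 138.33, 150.54, 173.77, 144.34, 185.1, 180.02,
    173.56, 218.52, 187.16, 161.22, 164.39, 131.42, 180.98, 178.62, 157.1, 181.03,
])

def bootstrap_mean_ci(data, n_boot=1000, sample_size=None, confidence=0.95, seed=0):
    """Percentile bootstrap confidence interval for the mean (illustrative helper)."""
    rng = np.random.default_rng(seed)
    data = np.asarray(data, dtype=float)
    if sample_size is None:
        sample_size = len(data)  # usual convention: resample size equals data size
    # One row per bootstrap sample, drawn with replacement from the data.
    resamples = rng.choice(data, size=(n_boot, sample_size), replace=True)
    boot_means = resamples.mean(axis=1)
    alpha = 1.0 - confidence
    lower, upper = np.percentile(boot_means, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return lower, upper, boot_means

# The same seed is reused for every level, so the intervals are nested.
for conf in (0.90, 0.95, 0.99):
    lo, hi, _ = bootstrap_mean_ci(heights, confidence=conf)
    print(f"{int(conf * 100)}% CI for the mean height: {lo:.2f} cm to {hi:.2f} cm")
```

Running the loop shows the interval widening as the confidence level goes from 90% to 99%. The exact bounds depend on the seed and on the chosen `sample_size`.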

Case (2): ASYMMETRIC DATASETS. You just launched a new marketing campaign ‘Y’, have very few data points for it, and want to check whether its conversion rate is higher than that of the existing campaign ‘X’, which has a lot of historical data

Let’s say you already know from a ton of historical data that Campaign ‘X’ gets a conversion rate of 15%, collected over, say, 20000 data points, which you consider enough evidence to conclude a 15% conversion rate for Campaign ‘X’.

Now, you’ve recently launched an A/B experiment where random, unbiased traffic is assigned to Campaign ‘Y’, which, say, has an actual theoretical conversion rate of 18% (which would eventually show up once you have all 20000 data points). So far, however, you have collected only 500 data points, and you want to draw some conclusion on whether Campaign ‘Y’ is actually better than Campaign ‘X’. The problem is that 500 data points are too few to reach a firm conclusion, and these 500 data points have given you an observed conversion rate of 22% for Campaign ‘Y’ so far.

Actual Theoretical Conversion Rates % =

Campaign ‘X’ = 15% (over 20000 data points)

Campaign ‘Y’ = 18% (over 20000 data points as seen by an Oracle / God who knows the truth over the future)

Applying Bootstrapped sampling —

What you can do is create arrays of size 500 (since Campaign ‘Y’ has only 500 data points; this is the bootstrap sample size) by random sampling with replacement from the Campaign ‘X’ data. Repeat this 1000 times (the number of bootstrap samples). Then plot the distribution of the conversion rates of these 1000 bootstrap samples. This gives you a histogram of the conversion rates you would expect from 500 data points if Campaign ‘Y’ were no better than Campaign ‘X’.

Now, you need to calculate the p-value.

To calculate the p-value, we check at which percentile the conversion rate of 22% (the observed conversion rate of Campaign ‘Y’) lies in the distribution of bootstrapped conversion rates. We can see that it is somewhere around the 93rd percentile.

Hence, the p-value for this task is 1 minus that percentile: 1 - 0.93 = 0.07, or 7% in this case. Generally we assume a 5% significance level (if you remember high school math). Since 7% is greater than 5%, the p-value does not fall in the rejection region, and we fail to reject the null hypothesis that Campaign ‘Y’ is no better than Campaign ‘X’.

Thereby we can conclude the following in English —

There is not much evidence based on the 500 data points collected on Campaign ‘Y’ above to suggest that the 22% observed Conversion rate on Campaign ‘Y’ is better than Campaign ‘X’ (15% observed conversion rate over 20000 data points) for a significance level of 5%

Imp Note —

- Your conclusion can change with the significance level you choose (generally taken as 5%)

- Had your p-value been < 5%, you could have concluded the opposite: that Campaign ‘Y’ is better than Campaign ‘X’.

Code snippet for calculating p-value :
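The following is a minimal sketch in Python with NumPy, not necessarily the author’s original code. The function name `bootstrap_p_value` is an illustrative assumption, and the Campaign ‘X’ data is a synthetic stand-in (20000 outcomes with exactly 15% conversions), since the real data is not listed.

```python
import numpy as np

def bootstrap_p_value(baseline, observed_rate, sample_size, n_boot=1000, seed=0):
    """One-tailed p-value: share of bootstrap rates >= the observed rate (illustrative)."""
    rng = np.random.default_rng(seed)
    baseline = np.asarray(baseline)
    # Each row is one bootstrap sample of `sample_size` outcomes drawn
    # with replacement from the baseline campaign.
    resamples = rng.choice(baseline, size=(n_boot, sample_size), replace=True)
    boot_rates = resamples.mean(axis=1)
    p_value = np.mean(boot_rates >= observed_rate)
    return p_value, boot_rates

# Synthetic stand-in for Campaign 'X' (assumption): 1 = converted, 0 = did not convert.
campaign_x = np.zeros(20000, dtype=int)
campaign_x[:3000] = 1  # exactly 15% conversions

p_value, boot_rates = bootstrap_p_value(campaign_x, observed_rate=0.22, sample_size=500)
print(f"p-value: {p_value:.4f}")
print("Reject the null at 5%" if p_value < 0.05 else "Fail to reject the null at 5%")
```

On this synthetic baseline the bootstrap rates have a standard deviation of roughly 1.6 percentage points, so the result will generally differ from the 93rd-percentile reading quoted above, which comes from the author’s own data and plot. The decision rule itself is the same: compare the p-value against the chosen significance level.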

Case (3): SYMMETRIC DATASETS. You have the exam marks for 2 sets of question papers, each taken by an equal number of candidates. You want to check whether one set was tougher than the other, based on the marks the students obtained.

Let’s take a case where class section ‘A’, which has 50 students, received question set ‘A’, and class section ‘B’, which also has 50 students, received question set ‘B’ for a mathematics exam. When the results came out, the students of class ‘A’ had average marks of 59, whereas class ‘B’ students had average marks of 61, with standard deviations of 10.8 and 11.4 for ‘A’ and ‘B’ respectively. Under the current grading system, marks > 60 earn first division and marks < 60 earn second division. The students of class ‘A’ feel unfairly treated, as they believe their question paper was slightly tougher than the class ‘B’ set. They demand justice from the principal of the school.

Here is the distribution of the marks obtained by class ‘A’ and ‘B’ students.

To check whether there was a bias in the question papers, we use a resampling technique called the Permutation and Resampling test (commonly known simply as a permutation test).

Applying Permutation and Resampling Test —

Let us take the number of permutations to be 10000. First, we pool the marks of the 50 students of class ‘A’ and the 50 students of class ‘B’ into a combined set of 100 marks. Then we shuffle these marks so that it is no longer possible to tell which mark belongs to which class. Next, we split the shuffled set into 2 sets of 50 each (sampling without replacement, unlike the bootstrap), call them ms1 and ms2, measure the difference in the average marks of the two sets, and store it in an array msd. We repeat this process 10000 times and look at the distribution of the stored differences.

Now we calculate the p-value, which is the proportion of shuffled differences that are at least as extreme as the observed difference in the means of the 2 classes, 61 - 59 = 2 marks. This number turns out to be 0.22 (11% of the points lie above 2 and 11% lie below -2), since this is a two-tailed test. Our rejection criterion was set at 5% based on the significance level, and 0.22 is greater than 0.05. So we cannot reject our null hypothesis, which is that there is no systemic bias in the exam question papers.

Thus, we can conclude in English that —

There is not enough evidence in the data to suggest that the marks obtained by set ‘A’ students are lower than the marks obtained by set ‘B’ students. Based on the limited 100 data points that we have in this situation, the difference in the average marks between the 2 sets could plausibly be a random coincidence rather than the result of a systemic bias (assuming a 5% significance level).

Code snippet for permutation test —
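The following is a minimal sketch in Python with NumPy, not necessarily the author’s original code. The function name `permutation_test` is an illustrative assumption, and the marks are synthetic values drawn from normal distributions with the means and standard deviations quoted above, since the real marks are not listed. The resulting p-value will therefore not match the 0.22 reported in the text.

```python
import numpy as np

def permutation_test(a, b, n_perm=10000, seed=0):
    """Two-tailed permutation test on the difference in means (illustrative helper)."""
    rng = np.random.default_rng(seed)
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    observed = b.mean() - a.mean()
    pooled = np.concatenate([a, b])  # combined set of all marks
    msd = np.empty(n_perm)
    for i in range(n_perm):
        shuffled = rng.permutation(pooled)  # shuffle, i.e. sample without replacement
        ms1, ms2 = shuffled[:len(a)], shuffled[len(a):]
        msd[i] = ms2.mean() - ms1.mean()
    # Two-tailed: shuffled differences at least as extreme as the observed one.
    p_value = np.mean(np.abs(msd) >= abs(observed))
    return observed, p_value, msd

# Synthetic marks (assumption): 50 students per class, parameters taken from the text.
data_rng = np.random.default_rng(42)
marks_a = data_rng.normal(59, 10.8, size=50)
marks_b = data_rng.normal(61, 11.4, size=50)

observed, p_value, msd = permutation_test(marks_a, marks_b)
print(f"Observed difference in means (B - A): {observed:.2f}")
print(f"Two-tailed p-value: {p_value:.3f}")
```

If the p-value is above the chosen significance level (5% here), the null hypothesis of no systemic bias between the two question sets is not rejected.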

Final Notes-

- These are not foolproof methods. But they are better than relying on a single point estimate of the data.

- The significance level can vary from case to case and depends on the context.

- Be careful to define the rejection region for the p-value according to your choice of test: whether you plan to use a one-tailed or a two-tailed test. A one-tailed test checks a directional hypothesis, for example s1 > s2. A two-tailed test looks for both s1 > s2 and s1 < s2 and splits the rejection region equally between the two sides of the null distribution.
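To make the last note concrete, here is a small sketch of how the choice of tail changes the p-value computed from the same resampled differences. The helper name `p_values_from_null` and the ten differences are hypothetical, chosen only to keep the arithmetic easy to follow.

```python
import numpy as np

def p_values_from_null(null_diffs, observed_diff):
    """Return (one-tailed, two-tailed) p-values from resampled differences (illustrative)."""
    null_diffs = np.asarray(null_diffs, dtype=float)
    # One-tailed: only differences in the hypothesized direction (s1 > s2) count.
    one_tailed = np.mean(null_diffs >= observed_diff)
    # Two-tailed: differences at least as extreme in either direction count.
    two_tailed = np.mean(np.abs(null_diffs) >= abs(observed_diff))
    return one_tailed, two_tailed

# Hypothetical resampled differences, roughly symmetric around zero.
null_diffs = [-3.0, -2.0, -1.0, -0.5, 0.0, 0.5, 1.0, 2.0, 2.5, 3.0]
one_tailed, two_tailed = p_values_from_null(null_diffs, observed_diff=2.0)
print(f"one-tailed: {one_tailed:.1f}, two-tailed: {two_tailed:.1f}")
# prints "one-tailed: 0.3, two-tailed: 0.5"
```

Three of the ten values are at or above 2.0, while five are at least 2.0 away from zero in either direction, so the two-tailed p-value is larger. Decide on the tail before looking at the data.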

Connect-

Feel free to connect for discussions.

Linkedin — https://www.linkedin.com/in/debayan-mitra-63282398/