Data Science: The Correct Way to Slice and Dice Your Data to Gain the Best Insights Automatically

Using Decision Trees and understanding how they work for Automated Insights generation

Table of Contents

1. An Example — Data Use Case

2. How do you derive insights ?

3. How many Total ways to slice & dice your data ?

4. How do we humans decide data segments — whatever gives us more Information !

5. Machines can do the same process of creating data segments — Decision Tree to the rescue

1) An Example — Data Use Case

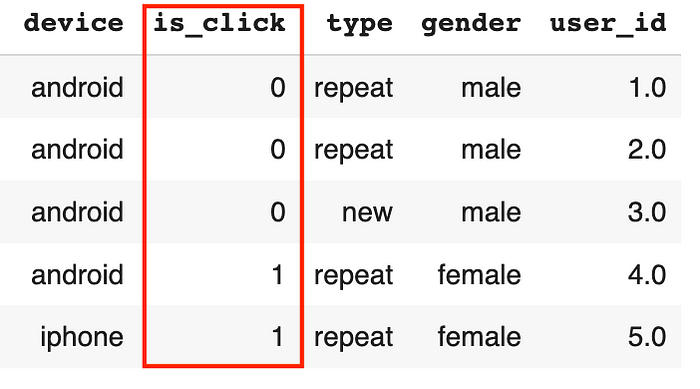

Let us take a simple dataset to understand the case here.

Each row represents a user, along with that user's attributes: device, type and gender. The is_click attribute specifies whether the user clicked on the product when it was presented to them: 1 represents a click and 0 represents no interaction with the product. We consider only 1 product here to keep things simple. Our job is to find insights into how users with different attributes interact with the product. The metric we use to track user interaction with the product is the click-through rate (CTR%), the percentage of users who clicked. The CTR% of the overall dataset in this case is about 45%.

2) How do you derive insights ?

Now you start slicing & dicing the data, and you immediately face a challenge: where should you start?

Let's say you start with the first attribute, device, and you see the following. You can clearly see that iPhone users behave very differently from Android users. This difference in behavior (differential behavior) becomes an insight for you. You may now choose to make certain decisions based on this insight.

But what about the other attributes, say user type?

Now you see a difference here as well. But which insight is more interesting? Clearly the first one, where you segmented by device instead of type. And why is that? Because you get a lot more information in the first case than in the second, where the difference in CTRs is not as significant.

Okay, but what if you have to look at the data by a combination of 2 attributes, say the user's (type x gender) combination?

You obtain an insight here as well. New and repeat users differ in CTR%, but within each of those groups, male and female users differ by a much larger margin. This is an insight that you would definitely be excited about.
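The slicing done so far (overall, by one attribute, by two attributes) can be sketched in a few lines. This is a minimal illustration only: the `rows` list is made-up toy data, not the dataset from this article, and `ctr_by` and `COLUMNS` are hypothetical helper names.

```python
from collections import defaultdict

# Hypothetical toy rows: (device, type, gender, is_click). NOT the article's data.
rows = [
    ("iphone", "repeat", "female", 1),
    ("iphone", "new", "male", 1),
    ("android", "repeat", "female", 1),
    ("android", "new", "male", 0),
    ("android", "repeat", "male", 0),
    ("iphone", "new", "female", 0),
]
COLUMNS = {"device": 0, "type": 1, "gender": 2}

def ctr_by(rows, *attributes):
    """Return {segment: CTR%}, where a segment is a tuple of attribute values."""
    clicks = defaultdict(int)
    views = defaultdict(int)
    for row in rows:
        key = tuple(row[COLUMNS[a]] for a in attributes)
        views[key] += 1
        clicks[key] += row[3]  # is_click is 1 for a click, 0 otherwise
    return {key: 100.0 * clicks[key] / views[key] for key in views}

overall = ctr_by(rows)                           # no attributes: the whole dataset
by_device = ctr_by(rows, "device")               # one attribute
by_type_gender = ctr_by(rows, "type", "gender")  # two attributes in combination

print(overall)
print(by_device)
print(by_type_gender)
```

Every extra attribute passed to `ctr_by` multiplies the number of segments you have to inspect, which is exactly why manual slicing gets out of hand quickly.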

But this isn't the end of it. What if you want to look at all 3 attributes in combination (type x gender x device)? I would argue that there are multiple ways to look at even this data. Take a look below:

(1) Device x Gender x Type —

If you compare Table 1 (above) with Table 2 (below), you can clearly see that the order of segmentation also matters. In Table 1, Android male users have a similar CTR of 15% whether they are new or repeat, but among Android repeat users, females have a 61% CTR whereas males have a 15% CTR. This is an insight that you want to capture.

(2) Device x Type x Gender —

3) How many Total ways to slice & dice your data ?

From the above table, you can see that there are 16 ways to slice the data to gain insights. How do we arrive at 16?

Out of 3 features, you can:

pick 0 features and generate permutations = 3C0 x 0! = 3P0 = 1

pick 1 feature and generate permutations = 3C1 x 1! = 3P1 = 3

pick 2 features and generate permutations = 3C2 x 2! = 3P2 = 6

pick 3 features and generate permutations = 3C3 x 3! = 3P3 = 6

So, we get a total of 1 + 3 + 6 + 6 = 16 possible permutations.

So, to generalize, if you have n features, the total number of possible ways to slice & dice the data to obtain insights is

where nPr and nCr mean the following in combinatorics,
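Reading off the pattern from the 3-feature count above and using the standard definitions of permutations and combinations, the general form can be written as follows (a reconstruction from the worked example, so treat the notation as an assumption):

```latex
\text{Total ways} \;=\; \sum_{r=0}^{n} {}^{n}P_{r} \;=\; \sum_{r=0}^{n} {}^{n}C_{r} \cdot r!
\qquad\text{with}\qquad
{}^{n}P_{r} = \frac{n!}{(n-r)!},
\quad
{}^{n}C_{r} = \frac{n!}{r!\,(n-r)!}
```

For n = 3 this gives 1 + 3 + 6 + 6 = 16, matching the count above.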

Now you can imagine how hard this becomes when the number of attributes is large. For n = 5 you get 326 total possibilities, and for n = 10 you get 9,864,101 total possibilities.
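These totals are easy to check with a short script. The function name `count_slicings` is just an illustrative choice; `math.perm` requires Python 3.8 or later.

```python
from math import perm

def count_slicings(n: int) -> int:
    """Sum of nPr for r = 0..n: ordered selections of any number of the n features."""
    return sum(perm(n, r) for r in range(n + 1))

for n in (3, 5, 10):
    print(n, count_slicings(n))
# prints:
# 3 16
# 5 326
# 10 9864101
```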

4) How do we humans decide data segments — whatever gives us more Information !

Before we understand Decision Trees, we need to understand 2 concepts — Shannon Entropy & Information Gain.

Understanding Shannon Entropy —

Shannon Entropy is exactly what its name suggests: a measure of the randomness (uncertainty) in a variable.

The more random the state of a variable, the higher its entropy. So, a class label with a high degree of imbalance has a low entropy, whereas a binary class label that is very close to balanced has an entropy close to 1.

Let’s understand this by taking 3 cases from the above is_click example —

“is_click” data :

Case 1: [1 0 0 1 0 1 1 0 0 0] => CTR% = 40%, Entropy = 0.97

Case 2: [1 0 1 1 1 0 0 1 1 1] => CTR% = 70%, Entropy = 0.88

Case 3: [1 1 1 1 0 0 1 1 1 1] => CTR% = 80%, Entropy = 0.72

As you can see above, the 1st case is the closest to an even balance between 0 and 1 and therefore has the highest entropy, while case 3 has the lowest entropy because it is more imbalanced than case 1 and case 2. For a binary label, the highest entropy (1) is obtained at perfect class balance, whereas the lowest entropy is obtained when the classes are highly imbalanced, irrespective of which class dominates.
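The entropy values for the three cases can be reproduced with the usual formula H = -sum(p_i * log2(p_i)), where p_i is the share of each class. The sketch below is one straightforward way to compute it; the function name `shannon_entropy` is an illustrative choice.

```python
from math import log2

def shannon_entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    total = 0.0
    for value in set(labels):
        p = labels.count(value) / n   # share of this class
        total += p * log2(1 / p)      # same as -p * log2(p)
    return total

case_1 = [1, 0, 0, 1, 0, 1, 1, 0, 0, 0]
case_2 = [1, 0, 1, 1, 1, 0, 0, 1, 1, 1]
case_3 = [1, 1, 1, 1, 0, 0, 1, 1, 1, 1]

for name, case in [("Case 1", case_1), ("Case 2", case_2), ("Case 3", case_3)]:
    ctr = 100 * sum(case) / len(case)
    print(f"{name}: CTR% = {ctr:.0f}%, Entropy = {shannon_entropy(case):.2f}")
# prints:
# Case 1: CTR% = 40%, Entropy = 0.97
# Case 2: CTR% = 70%, Entropy = 0.88
# Case 3: CTR% = 80%, Entropy = 0.72
```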

Understanding Information Gain —

To understand this, let me ask you a simple question —

We know that the overall CTR% in our dataset is about 45%. Now, back to our dilemma: do we split by User Type or by User Gender? Let's do both & check.

As you can see above, you clearly get more information when you split your data by gender than when you split it by user type.

In mathematical terms, this is Information Gain, which intuitively is just that: how much information you gain when you decide to segment your data by a certain feature. So it is now clear that we should choose our first split based on whatever maximizes Information Gain, which is the entropy of the parent node minus the weighted average entropy of the child nodes, with each child weighted by its share of the parent's rows.
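As a rough sketch of that definition, the snippet below computes the information gain of two candidate splits. The `is_click`, `gender` and `user_type` lists are hypothetical toy values chosen for illustration, not the article's dataset, and the function names are illustrative.

```python
from collections import defaultdict
from math import log2

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return sum((labels.count(v) / n) * log2(n / labels.count(v)) for v in set(labels))

def information_gain(labels, feature_values):
    """Parent entropy minus the size-weighted entropy of the child segments."""
    children = defaultdict(list)
    for label, value in zip(labels, feature_values):
        children[value].append(label)
    n = len(labels)
    weighted_child_entropy = sum(len(c) / n * entropy(c) for c in children.values())
    return entropy(labels) - weighted_child_entropy

# Hypothetical toy data, NOT the article's dataset.
is_click = [1, 1, 1, 0, 0, 0, 1, 0]
gender = ["female", "female", "female", "female", "male", "male", "male", "male"]
user_type = ["new", "new", "repeat", "repeat", "new", "new", "repeat", "repeat"]

gain_gender = information_gain(is_click, gender)
gain_type = information_gain(is_click, user_type)
print(f"gain by gender = {gain_gender:.3f}, gain by type = {gain_type:.3f}")
```

In this toy data the split by gender has the higher gain, so it would be chosen as the first split; with real data you would simply compare the gains of all candidate features.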

5) Decision Tree to the Rescue

A decision tree creates a branched tree structure in which the splits with the highest information gain are made first, followed by the others. A few points to note:

The categorical variables have been converted to numeric —

Gender = {“male”, “female”} => is_male = {1, 0}

Type = {“new”, “repeat”} => is_repeat = {0,1}

Device = {“android”, “iphone”} => is_android = {1,0}

So, the original features Gender, Type and Device have been converted into is_male, is_repeat and is_android, with each value being either 1 (yes) or 0 (no). This is essentially one-hot encoding with a single indicator column kept for each two-valued feature.
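The conversion above can be sketched in plain Python as follows. The `users` records are hypothetical examples and `encode` is an illustrative helper name; only the three output column names come from the mapping above.

```python
# Hypothetical raw records; the output columns mirror is_male / is_repeat / is_android.
users = [
    {"gender": "female", "type": "repeat", "device": "iphone"},
    {"gender": "male", "type": "new", "device": "android"},
]

def encode(user):
    """Map each two-valued categorical attribute to a 0/1 indicator."""
    return {
        "is_male": int(user["gender"] == "male"),
        "is_repeat": int(user["type"] == "repeat"),
        "is_android": int(user["device"] == "android"),
    }

encoded = [encode(u) for u in users]
print(encoded)
# prints:
# [{'is_male': 0, 'is_repeat': 1, 'is_android': 0}, {'is_male': 1, 'is_repeat': 0, 'is_android': 1}]
```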

The decision tree looks something like this. It is constructed by choosing, at each node, the split that maximizes the information gain as we segment the dataset.

Let us understand the tree above in detail. Let us take 2 branches, marked in red & blue in the diagram below.

If we look at the Red Branch,

the leaf node #4 has 96% of its labels as is_click = 1 and the remaining 4% as is_click = 0. This branch is constructed as

{is_male ≤ 0.5, is_android ≤ 0.5, is_repeat > 0.5}, which represents the user segment {Female, iPhone, Repeat}. So, this user segment has a 96% probability of click.

Now, let us look at the Blue Branch,

the leaf node #11 has 82% of its labels as is_click = 1 and the remaining 18% as is_click = 0. This branch is constructed as

{is_male > 0.5, is_android ≤ 0.5, is_repeat > 0.5}, which represents the user segment {Male, iPhone, Repeat}. So, this user segment has an 82% probability of click.

So the leaf nodes of the decision tree are the user segments that the tree, by greedily maximizing information gain at each split, found to be the most informative once we decide to slice & dice the dataset.
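To make the mechanics concrete, here is a minimal sketch of a greedy, information-gain-based tree builder over 0/1 features that returns its leaf segments. It is an illustration under stated assumptions, not the implementation that produced the tree in this article: the `rows` and `is_click` values are hypothetical toy data, `build_segments` is an illustrative name, and in practice you would normally rely on a library implementation.

```python
from math import log2

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return sum((labels.count(v) / n) * log2(n / labels.count(v)) for v in set(labels))

def information_gain(rows, labels, feature):
    """Gain from splitting on a 0/1 feature; 0.0 if one side would be empty."""
    left = [y for r, y in zip(rows, labels) if r[feature] == 0]
    right = [y for r, y in zip(rows, labels) if r[feature] == 1]
    if not left or not right:
        return 0.0
    n = len(labels)
    weighted = len(left) / n * entropy(left) + len(right) / n * entropy(right)
    return entropy(labels) - weighted

def build_segments(rows, labels, features, path=(), min_gain=1e-9):
    """Split on the highest-gain feature recursively; return (path, size, click_rate) leaves."""
    gains = {f: information_gain(rows, labels, f) for f in features}
    best = max(gains, key=gains.get) if gains else None
    if best is None or gains[best] <= min_gain:
        return [(path, len(labels), sum(labels) / len(labels))]  # leaf segment
    remaining = [f for f in features if f != best]
    segments = []
    for value in (0, 1):  # value 0 plays the role of "feature <= 0.5", value 1 of "> 0.5"
        idx = [i for i, r in enumerate(rows) if r[best] == value]
        segments += build_segments([rows[i] for i in idx], [labels[i] for i in idx],
                                   remaining, path + ((best, value),), min_gain)
    return segments

# Hypothetical toy data, NOT the article's dataset.
rows = [
    {"is_male": 0, "is_android": 0, "is_repeat": 1},
    {"is_male": 0, "is_android": 0, "is_repeat": 0},
    {"is_male": 1, "is_android": 0, "is_repeat": 1},
    {"is_male": 1, "is_android": 0, "is_repeat": 0},
    {"is_male": 0, "is_android": 1, "is_repeat": 1},
    {"is_male": 0, "is_android": 1, "is_repeat": 0},
    {"is_male": 1, "is_android": 1, "is_repeat": 1},
    {"is_male": 1, "is_android": 1, "is_repeat": 0},
]
is_click = [1, 1, 1, 1, 1, 0, 0, 0]

segments = build_segments(rows, is_click, ["is_male", "is_android", "is_repeat"])
for path, size, rate in segments:
    label = ", ".join(f"{f}={v}" for f, v in path)
    print(f"{{{label}}}: {size} users, click rate {rate:.0%}")
```

Each printed line corresponds to one leaf, that is, one user segment with its size and click rate. In this toy data the first split is on is_android because it has the largest information gain at the root.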